In today’s data-driven world, organizations constantly struggle with combining vast amounts of data generated from various sources into one consolidated data set. This unified view is essential for informed, data-driven business decisions. In part one of this series, we saw a high-level overview of the importance and methods of data reconciliation. Today, we’ll dive into some challenges that arise in this process and how we at CID address them. We’ll work with a concrete example use case to make it more tangible.

Imagine you’re working for an automotive manufacturer and have many suppliers you need to track in order to plan your manufacturing process efficiently and react to potential disruptions in your supply chain. For this purpose, you need an accurate database containing all your suppliers and information about how they relate to other entities, such as organizations and individuals. You already know your direct suppliers and have a database about them. But there is still some missing information about these suppliers, and you don’t know everything about the suppliers of your suppliers. So, you look for data providers that sell data about companies in your industry to fill these gaps. After some effort, you finally find some promising data providers, but just having this new data is not enough to get a complete picture of your supply chain.

To get the most insights from the data, you must enrich your suppliers with the new company data in a single data set. Since there are usually no standard identifiers between different data sets, you need to find some other solution to match them, i.e., an algorithm to identify the shared (and unique) entities and relations from your known data and the new one fully automated and on a large scale. This article will address some challenges in building such an algorithm and provide insights into a solution.

Data Quality Issues

Every new data source you acquire brings with it not only new knowledge but potentially also errors. Some of these errors might be impossible to find – after all, you cannot fact-check everything. However, other kinds of errors can be found by systematically checking assumptions about expected data types and value ranges in an automated fashion.

For instance, for a field containing a company’s inception date, you expect a date format or a value that can be transformed into a date format. Also, this date shouldn’t be in the future or too distant past. Moreover, a field with CEO information should point to an individual or contain a string that resembles a human name. Similarly, a field that represents the country a company is registered in should again contain an ID or be a string value that matches the name of a known country, etc.

All these expectations can be defined in a data schema you apply to all your data – Let’s explore this important concept in more detail.

Inconsistent Data Schemas

A schema is a blueprint of your data architecture, defining valid content like entity types, data types, or key-value pairs. Every new data source will have its own schema.

Revisiting the examples from above, the already mentioned date fields might be in different date formats, e.g.:

- YYYY/MM/DD

- MM/DD/YYYY

- MM.YYYY

- …

Some data sources might have information about a company’s CEO, while others have none or only more vague information like “associated people.” A country might be represented in different ways, e.g., “USA” vs. “United States of America” vs. “ISO 3166-2:US”, etc.

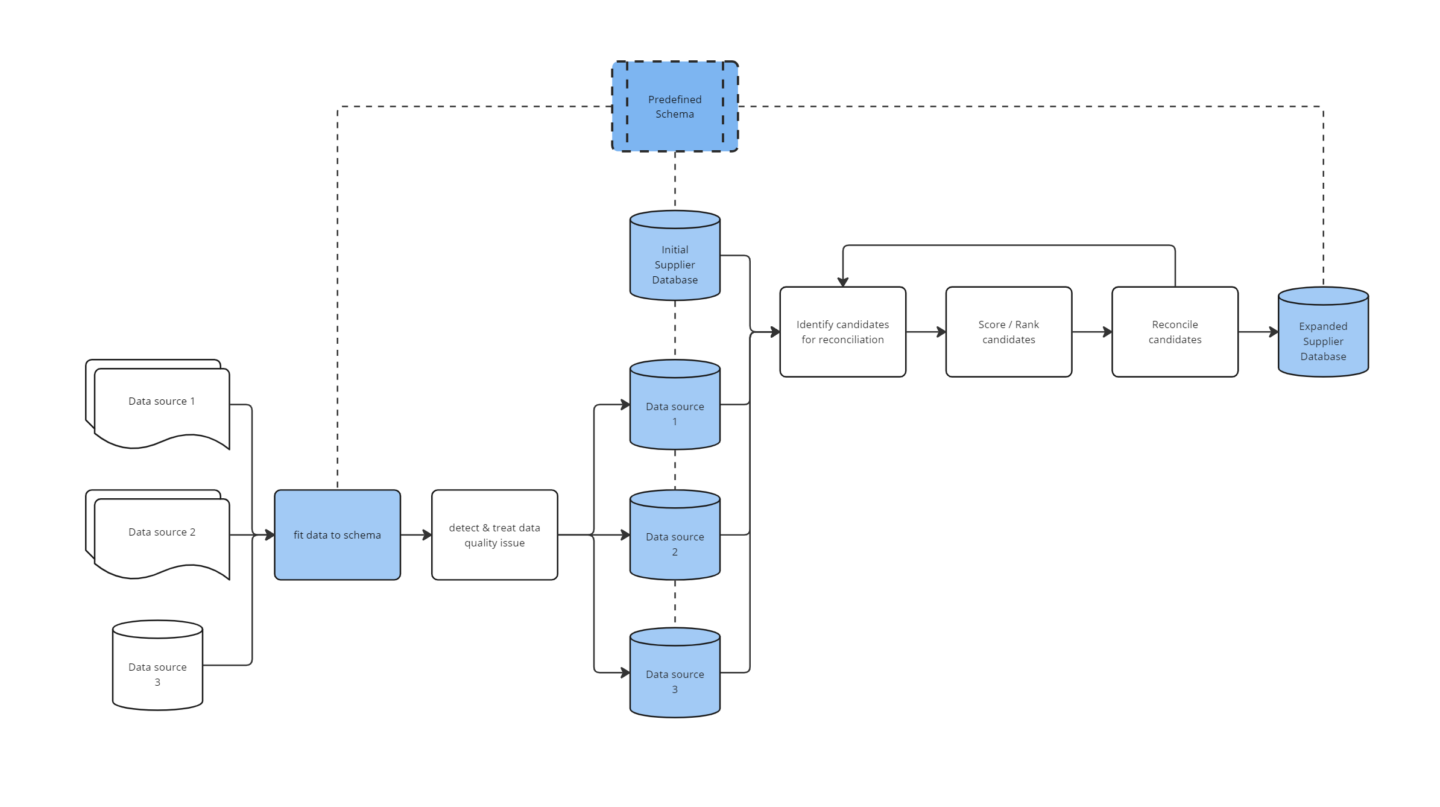

To combine multiple data sources, you must define a schema that can combine the information in each data source and transform the data from the sources to fit the schema. However, given that you have a concrete use case for your data, i.e., building up a supply chain database, defining your schema independently of any single data source makes sense. After all, your use case defines which parts of the data will be helpful and which can be ignored. Another advantage of this is that you won’t have to redefine your schema with every newly added data source.

Identify Match Candidates

One major task is to find candidates from all data sets that could be merged, i.e., entities that could be the same company. In the most straightforward case, you can find these candidates via shared external IDs, but most commonly, these don’t exist in all data sets, and you will have to consider other strongly identifying features. These features can, for example, be the name or website. It’s not sufficient to directly compare the features as they are since, e.g., the name of a company can be written with different capitalization, with or without legal forms, or additional information about the company. For instance, consider the following:

- “WISAG GEBAEUDEREINIGUNG NORD GMBH & CO. KG” vs. “WISAG Gebäudereinigung Nord”

- “RUESS GMBH, WÄSCHEREI, TEXTILREINIGUNG, MIETBERUFSKLEIDUNG W” vs. “Ruess GmbH”

Based on the name, they could refer to the same companies and thus should at least become candidates for matching. So, you will need to transform these features to find “identical” entries in different data sources. Finding possible candidates can be assisted by Natural Language Processing methods such as n-gram-based similarity scores.

Match Candidate Reconciliation

Once you have identified the reconciliation candidates, the next problem is deciding which candidate pairs are similar enough to reconcile. For this, you need to score or rank them using the given information. This score should depend on the nature of the features. For example, identical addresses provide more evidence for a potential match than only identical country information. However, the quality of the match should also have an impact. For example, a complex matching company name including a legal form (e.g., “Kayer Automotive Systems L.P.”) gives more evidence than one that only consists of an acronym (e.g., “KAS”). You also need to treat contradicting information and missing information differently.

Finally, you will reconcile the best-ranked candidate pairs and/or those scoring above a certain threshold to one entity. Adjusting this threshold and the feature weights allows you to balance precision and recall according to your requirements. Reconciling entities may lead to further candidates and evidence for even more matches because the sum of your information about an entity from two sources may be more considerable than the sole information from a single source. You can make use of this by applying an iterative approach for further reconciliation.

Both manual feature engineering and machine learning approaches can lead to a matching algorithm that provides good results while significantly reducing manual matching efforts. Thus, most (or even all) match decisions can be handled automatically, while only a few edge cases might need human intervention. New insights from these manual corrections can again be used to improve the algorithm by adjusting the model’s features or training the model on cleaner data. Thus, the degree of automation is successively increased.

Scalability & Complexity

Reconciliation decisions can become quite complex and potentially involve thousands of possible match candidates. Therefore, reducing complexity wherever possible and developing a reconciliation pipeline (see Figure) that can easily scale to many data sources and significant amounts of data is essential.

Working with a pre-defined schema helps reduce the complexity of the reconciliation problem since you don’t have to make significant adjustments with every newly added data source. Instead, you only need to do custom work to make new data compliant with the schema. After this, the steps towards reconciling the data are always the same. Therefore, the human effort involved in adding new data sources becomes feasible. This also scales better to large amounts of data than trying to match all aspects of every source.

Another issue you encounter when you combine more than two data sources is that the complexity increases when you simultaneously consider all match candidates from all sources. A good reconciliation algorithm can manage this. Restrictions might arise from the data size in contrast to your computational resources. But conceptually, you can quickly scale from two to n data sets while omitting unwanted effects that could arise from reconciling multiple data sources with an iterative approach. In other words, combining first A and B and then adding C might lead to different results than combining first B and C and then adding A. Thus, the solution has to be able to combine A, B, and C at once.

Conclusion – TL;DR

Reconciliation is a crucial step for ensuring the consistency and accuracy of your data. The goal is to define a single source of truth that will help you gain insights to make valuable business decisions. We at CID can help you define a customized data reconciliation process tailored to your business needs based on your available data and resources. Our aim is to achieve a high degree of automation while ensuring proficient data quality. We have years of experience in not only deciding on the big picture questions, such as how to organize data pipelines but also in the nitty gritty details of dealing with different data sources, such as defining the best schema for a use case and further finding an ideal weighting of different reconciliation features.