Introduction

Contemporary organizations not only leave a long trail of analytical data as they create value; they also use this analytical data to create further value by supporting decision-making and optimizing processes. In many organizations, the availability and ease of use of analytical data are now crucial for success.

It is therefore particularly important to choose a data platform that suits the organization in terms of its structure, the technologies it employs, its workflows, and its use cases for analytical data.

Strauss is a leading German brand manufacturer of workwear and protective clothing that sells its range via a web shop, a catalog, and stores. In recent years, Strauss has improved the agility and quality of the development of its operational systems by transitioning to decentralized, cross-functional development teams within its domains.

This also gave Strauss the chance to rethink its data platform: the previously centralized approach did not align well with the decentralized ownership of operational systems, and it struggled to accommodate new sources of analytical data across domains as well as new use cases for analytical data. Because the Data Mesh architecture applies domain-driven design to analytical data, it turned out to be an attractive approach for a new data platform.

Monolithic Data Platforms

Over the last decades, several architectures emerged that aimed to unlock the value of analytical data.

Data warehouses make analytical data available in a highly structured and integrated way. They are very useful for typical business intelligence queries but lack flexibility: new sources of analytical data need to be carefully integrated into the data warehouse. This can be limiting for unstructured data, which sometimes needs to be stored in its original form for machine learning or future use cases that are not yet known.

At Strauss, analytical data usage was limited to relatively static reporting use cases and supported by a few monolithic data warehouses maintained by centralized data warehouse teams.

Data lakes take the opposite approach to data warehouses. Here, analytical data is stored in its raw and often unstructured form, which makes it easier to onboard new sources of analytical data, and use cases are not limited by the rigid structure a data warehouse imposes. However, the lack of structure is also a data lake’s main disadvantage: understanding of the stored raw data is easily lost, which slows down attempts to gain value from it. Moreover, governance can become a challenge.

Data lakehouses aim to combine the best of both worlds: they complement a data lake with an integrated view that simplifies the use of analytical data and facilitates governance.

In recent years, the multimodality of analytical data, e.g., to support use cases that require near real-time streaming, has gained importance. Furthermore, data platforms are often implemented in multi-cloud environments with on-premises and public cloud components.

A challenge for all three architectures is integrating analytical data from different sources (e.g., an operational system), particularly when the meaning and quality of the analytical data are determined in different parts of the organization and even more so in the face of inevitable change over time.

In a data lake, the responsibility for keeping track of the meaning and quality of the raw data lies with each individual consumer. Consumers need intimate knowledge of the processes that give the raw data its structure and meaning and that potentially limit its quality. They need to invest a lot of time to bring the raw data into a shape that supports their analytical use case, and communication with the owners of the raw data can become a bottleneck.

Data warehouses and lakehouses rely on central teams that have to keep track of all sources of analytical data in order to maintain the integration layer that the data warehouse or lakehouse provides for governance, and to ensure that analytical data is used correctly. These central teams become a bottleneck as the number of sources of, and use cases for, analytical data grows.

The underlying problem is that ownership of analytical data is determined not by its origin, which defines its meaning, limitations, and evolution over time, but by the team responsible for a particular data platform technology. This mismatch only intensifies with multimodal or multi-cloud data platforms.

At Strauss, it became difficult for the centralized data warehouse teams to maintain ETL pipelines that extracted analytical data from the operational systems of different domains, and to make that data available in a way that bridged the gap between the intricacies of operational data and the expectations of BI users. The meaning and quality of analytical data got lost, new sources were slow to onboard, and polysemes (terms with different meanings in different domains) contributed to growing frustration and confusion at a time of increasing interest in autonomous self-service business intelligence.

Moreover, analytical data has become important for use cases other than business intelligence, such as near real-time processing, machine learning, and artificial intelligence, which require the multimodal availability of analytical data.

With the growing use of analytical data, the demands on data governance at Strauss also increased. One example is the EU General Data Protection Regulation (GDPR), which gives individuals authority over their personally identifiable information through measures such as pseudonymization and the right to be forgotten. It became clear that data governance needs to take into account that the origins of analytical data are decentralized across domains.
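Pseudonymization can be made concrete with a keyed hash. The following Python sketch is illustrative and not taken from Strauss’s platform: it replaces an identifier with an HMAC-SHA256 digest, so the same customer always maps to the same pseudonym and joins across data sets keep working, while nobody without the key can link a pseudonym back to a known identifier by recomputing it. The function name `pseudonymize` and the use of a single secret key are assumptions; in practice the key would be kept in a secrets store, separate from the data.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, key: bytes) -> str:
    """Return a stable pseudonym for `identifier` using HMAC-SHA256.

    The same (identifier, key) pair always yields the same 64-character hex
    digest; without the key, a pseudonym cannot be recomputed from a known
    identifier.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    key = b"example-key-do-not-use"  # hypothetical; load from a secrets store in practice
    print(pseudonymize("customer-42", key))
```

A keyed hash is used instead of a plain hash because identifiers such as customer numbers are easy to enumerate: with an unkeyed hash, anyone could hash all candidate numbers and compare the results.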

The Data Mesh Architecture

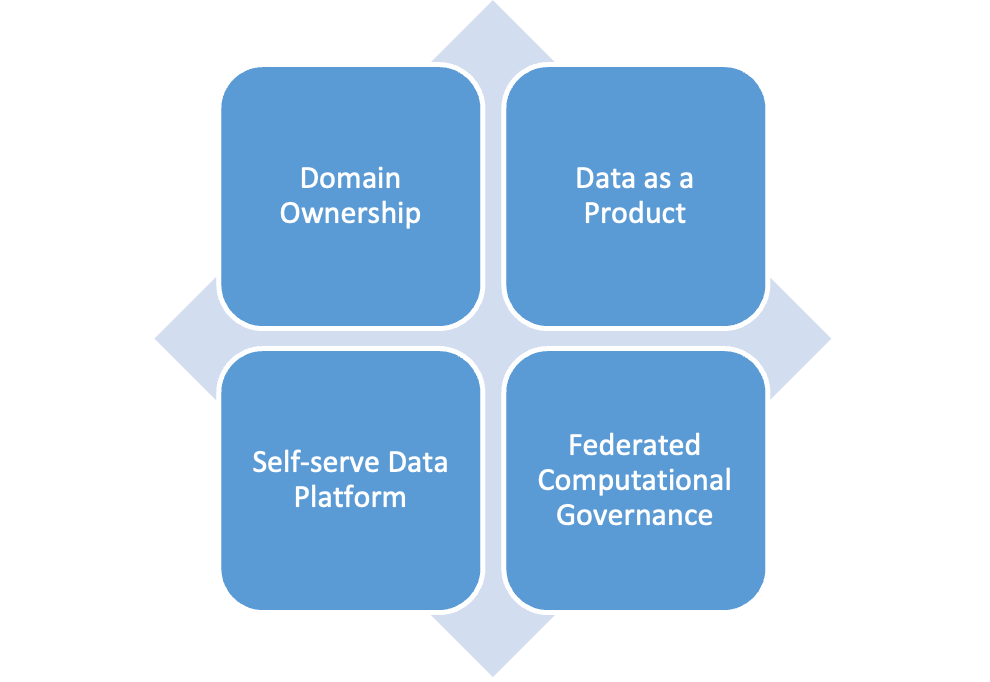

The Data Mesh architecture, introduced by Zhamak Dehghani in 2019, proposes a decentralized data platform in which ownership of analytical data is not organized around technologies and their experts. Instead, analytical data is under domain ownership and is managed by the same cross-functional development teams that also handle the operational systems and data of the respective domain.

Product thinking shifts the perspective from analytical data as a by-product of executing the domain’s actual products to data as a product. Analytical data is intentionally shared by its domain in a way that maximizes its usability for consumers. Similar to the API of a microservice, so-called data products are documented and versioned, guarantee certain service-level agreements (SLAs) about availability and data quality, and evolve over time in response to consumers’ needs to increase their value.
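To illustrate what a versioned data product with service-level guarantees might look like, the following Python sketch models a hypothetical descriptor with two example objectives, data freshness and completeness, and checks observed values against them. The class and field names, the product name `stock-levels`, and the choice of objectives are assumptions for illustration, not part of the Data Mesh concept itself or of Strauss’s platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class ServiceLevelObjectives:
    max_staleness: timedelta  # newest data may be at most this old
    min_completeness: float   # required share of expected rows, between 0 and 1


@dataclass(frozen=True)
class DataProductVersion:
    name: str                 # hypothetical product name
    version: str              # e.g. a semantic version such as "2.1.0"
    slos: ServiceLevelObjectives


def check_slos(product: DataProductVersion, last_updated: datetime,
               rows_present: int, rows_expected: int,
               now: datetime) -> list[str]:
    """Return human-readable SLO violations; an empty list means all objectives are met."""
    violations = []
    age = now - last_updated
    if age > product.slos.max_staleness:
        violations.append(f"{product.name}@{product.version}: data is stale ({age} old)")
    completeness = rows_present / rows_expected if rows_expected else 1.0
    if completeness < product.slos.min_completeness:
        violations.append(
            f"{product.name}@{product.version}: completeness {completeness:.1%} "
            f"below {product.slos.min_completeness:.1%}")
    return violations


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    product = DataProductVersion(
        "stock-levels", "2.1.0",
        ServiceLevelObjectives(max_staleness=timedelta(hours=1), min_completeness=0.99))
    print(check_slos(product, now - timedelta(hours=2), 990, 1000, now))
```

Such a check could run on a schedule and publish its result alongside the data product’s metadata, so that consumers can see whether the guarantees currently hold.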

Governance prevents analytical data from being misused, e.g., by establishing rules for access control and regulatory concerns. At the same time, governance needs to ensure that analytical data is discoverable, understandable, multimodal, reliable, and composable across domain boundaries, so that the Data Mesh can actually be more valuable than the sum of its parts.

Federated computational governance takes into account that knowledge about analytical data and its use cases is decentralized and spread over domains. For agility and scalability, a fine balance between global concerns and domain autonomy needs to be maintained. This is only possible with automation: governance policies must be enforced automatically and monitored computationally.
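Computational governance means that policies are code that runs automatically, for example as a step in a deployment pipeline. The Python sketch below shows the idea with two hypothetical global policies: every data product must name an owner, and every column flagged as personally identifiable must be pseudonymized. Metadata is represented as a plain dictionary; the policy names and metadata keys are assumptions, and real platforms may use a dedicated policy engine instead.

```python
from typing import Any, Callable, Iterable

# Descriptive metadata of a data product, reduced to a dict for this sketch.
Metadata = dict[str, Any]
# A policy inspects metadata and returns a list of violations (empty = compliant).
Policy = Callable[[Metadata], list[str]]


def require_owner(md: Metadata) -> list[str]:
    """Global policy: every data product must declare an owning team."""
    return [] if md.get("owner") else ["missing owner"]


def require_pseudonymized_pii(md: Metadata) -> list[str]:
    """Global policy: columns flagged as PII must be pseudonymized."""
    return [
        f"column '{col['name']}' contains PII but is not pseudonymized"
        for col in md.get("columns", [])
        if col.get("pii") and not col.get("pseudonymized")
    ]


GLOBAL_POLICIES: tuple[Policy, ...] = (require_owner, require_pseudonymized_pii)


def enforce(md: Metadata, policies: Iterable[Policy] = GLOBAL_POLICIES) -> list[str]:
    """Evaluate all policies; a caller such as a CI step could block deployment on any result."""
    return [violation for policy in policies for violation in policy(md)]


if __name__ == "__main__":
    print(enforce({"owner": "team-logistics",
                   "columns": [{"name": "email", "pii": True}]}))
```

Because the global policies are shared code while each domain’s metadata stays with the domain, domains keep their autonomy and the central governance effort shifts to maintaining the policies themselves.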

The Data Mesh is implemented as a self-serve data platform. This platform provides APIs that abstract away the complexities of the underlying data platform technologies, ensure integration between data products, and satisfy governance policies. It helps generalist developers in domains without a data engineering background to share and consume analytical data.

It becomes clear that Data Mesh is not another data platform technology. It is rather an organizational change in the treatment of analytical data toward domain ownership, supported by standards, platform engineering, and abstractions over already existing data platform technologies.

A Data Mesh implementation cannot be an off-the-shelf component. It requires knowledge of the organization and its domains, the workflows and CI/CD pipelines of the domain development teams, and the technologies for analytical data that already exist in the organization or are under consideration. Existing adoptions at companies such as Zalando and Netflix realize the principles of the Data Mesh architecture in very different ways.

Because organizational changes, use cases, and the development of the platform and its standards are interdependent, introducing a Data Mesh is not a one-time effort. It is a continuous, agile process that strategically interleaves advances and adjustments in all these aspects. Because it affects ownership, roles, and responsibilities across the organization, a strong long-term commitment from decision-makers is important as well.

Two kinds of fears need to be addressed: formerly centralized data platform teams may fear losing control, and domain development teams may fear being overloaded with additional work that requires a new skill set. To address the first, it is important to point out that certain global governance policies are actually crucial for a Data Mesh to fulfill its promises, and that the self-serve data platform even brings new opportunities for automating governance. To address the second, data products need to be acknowledged as first-class citizens of software development, and the APIs of the self-serve data platform should be designed for easy and flexible use by developers and for integration into established development workflows.

The Data Mesh at Strauss

The development teams within Strauss’s domains use domain-driven design to develop modern microservice architectures, some of which communicate via event sourcing with Apache Kafka. Increasingly, the domain development teams use a GitOps approach to deploy and maintain their operational systems on their own Kubernetes clusters, which run on-premises in the CID cloud.

For self-service business intelligence, Strauss is establishing the public cloud OLAP database Snowflake and the business intelligence tools Tableau and ThoughtSpot in an ongoing process that is coordinated with the development of the Data Mesh.

Integrating the self-serve data platform into the workflows of the domain development teams simplifies their onboarding, enables integration with the monitoring, logging, and alerting solutions they already use, and allows the lifecycle of data products to be closely synchronized with that of the operational systems. Because developers and business users are already familiar with existing data platform technologies like Apache Kafka and Snowflake, the Data Mesh supports its first use cases with these technologies. However, the Data Mesh can also integrate new data platform technologies for storage and compute in the future whenever the need arises, whether in the private or the public cloud.

One notable feature of the Data Mesh at Strauss is that there is no unified access layer for data. Analytical data only leaves a storage technology like Snowflake when needed, and decisions made by federated computational governance, e.g., about access control, are pushed down into the native mechanisms of the individual storage technology. Because the platform is extensible, however, a unified query engine can be added to the self-serve data platform later.
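Pushing an access decision down into Snowflake means translating it into Snowflake’s own privilege model instead of routing queries through a central access layer. The following Python sketch assumes, for illustration, that a governance decision grants a consumer role read access to one table behind an output port; it emits the `GRANT` statements such a role needs (usage on the database and schema, plus `SELECT` on the table). The `AccessGrant` type and all object names are hypothetical, and identifiers are neither quoted nor validated, so the sketch is not safe for untrusted input.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessGrant:
    """A governance decision: `consumer_role` may read one table of an output port."""
    database: str
    schema: str
    table: str
    consumer_role: str


def to_snowflake_sql(grant: AccessGrant) -> list[str]:
    """Translate the decision into native Snowflake privileges.

    Reading a table requires USAGE on its database and schema plus SELECT on
    the table itself. Identifiers are assumed to be pre-validated.
    """
    schema_fqn = f"{grant.database}.{grant.schema}"
    role = grant.consumer_role
    return [
        f"GRANT USAGE ON DATABASE {grant.database} TO ROLE {role};",
        f"GRANT USAGE ON SCHEMA {schema_fqn} TO ROLE {role};",
        f"GRANT SELECT ON TABLE {schema_fqn}.{grant.table} TO ROLE {role};",
    ]


if __name__ == "__main__":
    decision = AccessGrant("LOGISTICS", "STOCK", "STOCK_LEVELS", "SALES_ANALYST")
    for statement in to_snowflake_sql(decision):
        print(statement)
```

The statements could then be executed with whichever Snowflake client the platform already uses; an analogous translation would target Kafka ACLs for topic-based output ports.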

Analytical data is shared between domains through so-called data products. A data product is a specialized API that shares analytical data in a standardized, discoverable, understandable, composable, and multimodal way through so-called output ports. It is implemented as a unit of analytical data, descriptive metadata, and transformations such as ingestion pipelines, and its individual components can be distributed across private and public clouds.
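The anatomy of a data product described above can be captured in a small data structure. The following Python sketch is a hypothetical model, not Strauss’s metadata schema: a data product belongs to a domain, carries descriptive metadata, lists its transformations, and exposes one or more output ports. It counts as multimodal when it offers more than one kind of port, here a Kafka topic for streaming and a Snowflake table for SQL access; all names are assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class PortKind(Enum):
    KAFKA_TOPIC = "kafka-topic"          # streaming access
    SNOWFLAKE_TABLE = "snowflake-table"  # SQL / BI access


@dataclass(frozen=True)
class OutputPort:
    name: str
    kind: PortKind
    location: str  # topic name or fully qualified table name


@dataclass
class DataProduct:
    domain: str
    name: str
    description: str  # descriptive metadata for the catalog
    output_ports: list[OutputPort] = field(default_factory=list)
    transformations: list[str] = field(default_factory=list)  # e.g. ingestion pipeline names

    def port_kinds(self) -> set[PortKind]:
        return {port.kind for port in self.output_ports}

    def is_multimodal(self) -> bool:
        """True if consumers can access the data in more than one mode."""
        return len(self.port_kinds()) > 1


if __name__ == "__main__":
    stock = DataProduct(
        domain="logistics",
        name="stock-levels",
        description="Current stock per article and warehouse",
        output_ports=[
            OutputPort("events", PortKind.KAFKA_TOPIC, "logistics.stock-levels.v1"),
            OutputPort("table", PortKind.SNOWFLAKE_TABLE, "LOGISTICS.STOCK.STOCK_LEVELS"),
        ],
        transformations=["ingest-warehouse-events"],
    )
    print(stock.is_multimodal())
```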

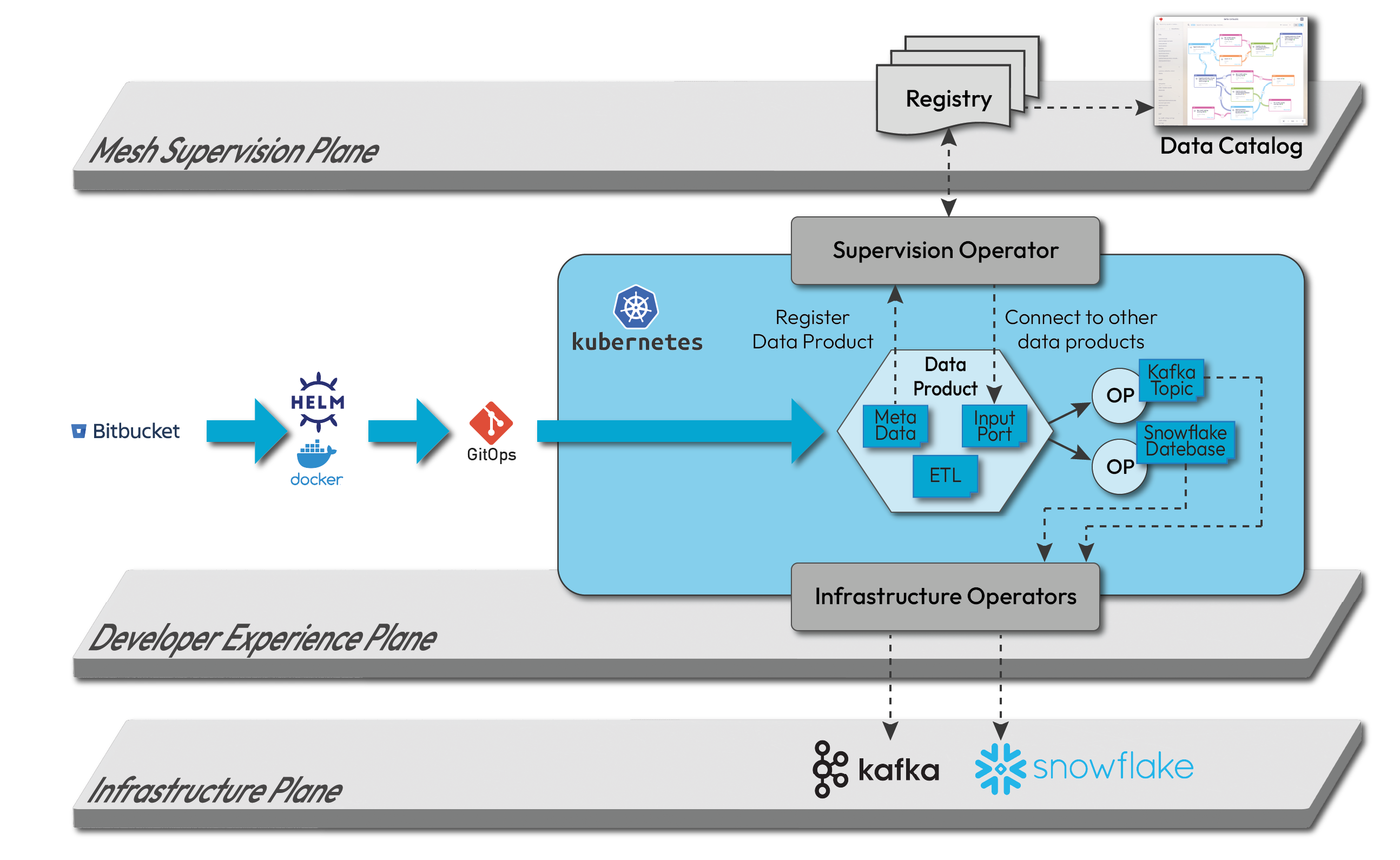

Conceptually, the Data Mesh has three planes with different concerns.

Developer Experience Plane

The developer experience plane offers the APIs of the self-serve data platform to domain development teams for the development, deployment, and maintenance of data products.

The central idea of the developer experience plane is to build on the experience of the domain development teams with Kubernetes. Custom Kubernetes operators offer the APIs of the self-serve data platform to developers as extensions to the Kubernetes API of their Kubernetes clusters. They provide a declarative and extensible interface to the self-serve data platform that can be combined with built-in APIs of the Kubernetes cluster as well as with other Kubernetes API extensions.
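To make the operator idea concrete, the Python sketch below builds a custom resource of a hypothetical `DataProduct` kind and shows the reconciliation step at the heart of a Kubernetes operator: comparing the desired state declared in the resource with the actual state and deriving the actions needed to close the gap. The API group `datamesh.example.com/v1alpha1`, the spec fields, and the action strings are invented for illustration and are not the API of Strauss’s operators. Kubernetes accepts manifests in JSON as well as YAML, so the dictionary could be serialized with `json.dumps` and applied with `kubectl apply -f`.

```python
import json


def data_product_resource(name: str, namespace: str, domain: str,
                          topics: list[str]) -> dict:
    """Build a custom resource of a hypothetical DataProduct kind."""
    return {
        "apiVersion": "datamesh.example.com/v1alpha1",  # hypothetical API group/version
        "kind": "DataProduct",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "domain": domain,
            "outputPorts": [{"kafkaTopic": {"name": t}} for t in topics],
        },
    }


def reconcile(resource: dict, existing_topics: set[str]) -> list[str]:
    """Derive the actions that move the actual state towards the declared state."""
    desired = {port["kafkaTopic"]["name"]
               for port in resource["spec"]["outputPorts"]
               if "kafkaTopic" in port}
    return [f"create topic {topic}" for topic in sorted(desired - existing_topics)]


if __name__ == "__main__":
    resource = data_product_resource(
        "stock-levels", "logistics", "logistics",
        ["logistics.stock-levels.v1", "logistics.stock-levels.v2"])
    print(json.dumps(resource, indent=2))
    print(reconcile(resource, existing_topics={"logistics.stock-levels.v1"}))
```

A real operator would run this loop continuously in response to resource changes, also handle updates and deletions, and report status back on the resource.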

Using the same development workflow as for microservices in the operational systems of each domain, data products are developed in a Git repository, packaged as a Helm chart with associated Docker images, and deployed using GitOps.

Infrastructure Plane

Currently, data products store their analytical data in Apache Kafka and/or Snowflake. Transformations are either executed in Snowflake or on the domain development team’s Kubernetes cluster using various stream processing frameworks and workflow managers.

Technically, Snowflake and Apache Kafka are centrally managed; however, the Kubernetes API extensions that integrate them into the developer experience plane ensure proper isolation between domains and data products.
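One possible way to isolate data products on a shared Kafka cluster is a topic naming convention combined with prefixed ACLs, which Kafka supports through its `PREFIXED` resource pattern type. The Python sketch below assumes, purely for illustration, that every data product owns the topics under `<domain>.<product>.` and checks whether a topic belongs to a given data product; it does not describe how Strauss’s extensions actually achieve isolation. Restricting names to lowercase letters, digits, and inner hyphens, without dots, keeps the dot-separated prefix unambiguous.

```python
import re

# Lowercase letters, digits and single inner hyphens; no dots, so the
# dot-separated prefix below cannot be ambiguous.
_NAME = re.compile(r"[a-z][a-z0-9]*(?:-[a-z0-9]+)*")


def topic_prefix(domain: str, product: str) -> str:
    """Return the (hypothetical) topic prefix reserved for one data product."""
    for part in (domain, product):
        if not _NAME.fullmatch(part):
            raise ValueError(f"invalid name: {part!r}")
    return f"{domain}.{product}."


def owns_topic(domain: str, product: str, topic: str) -> bool:
    """True if `topic` lies inside the namespace reserved for this data product."""
    return topic.startswith(topic_prefix(domain, product))


if __name__ == "__main__":
    print(owns_topic("logistics", "stock-levels", "logistics.stock-levels.v1"))
    print(owns_topic("sales", "orders", "logistics.stock-levels.v1"))
```

A prefixed ACL on `logistics.stock-levels.` would then confine the data product’s producers to its own topics without listing each topic individually.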

Mesh Supervision Plane

The mesh supervision plane keeps a central registry of all data products across all domains, based on a common metadata schema. The registry feeds a searchable catalog of the Data Mesh and also allows data products to connect to each other and to consume analytical data using the APIs of the underlying storage technology.

Data product developers use another Kubernetes API extension in the developer experience plane to register their data products on deployment together with descriptive metadata, to control access to them declaratively, and to obtain connection information for other data products via so-called input ports.
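The interplay of registration, catalog search, and input ports can be sketched as an in-memory registry. In this hypothetical Python model, which is not the actual API of Strauss’s mesh supervision plane, a data product registers its output ports and the domains allowed to read it on deployment; another data product resolves an input port to obtain connection information, and the registry refuses if the consumer’s domain has not been granted access.

```python
from dataclasses import dataclass, field


@dataclass
class RegistryEntry:
    owner_domain: str
    description: str
    output_ports: dict[str, str]  # port name -> connection information
    allowed_domains: set[str] = field(default_factory=set)  # declared access


class DataProductRegistry:
    """Hypothetical central registry of the mesh supervision plane."""

    def __init__(self) -> None:
        self._entries: dict[str, RegistryEntry] = {}

    def register(self, product: str, entry: RegistryEntry) -> None:
        """Called on deployment; re-registering replaces the previous entry."""
        self._entries[product] = entry

    def search(self, term: str) -> list[str]:
        """Catalog search over product names and descriptions."""
        term = term.lower()
        return sorted(name for name, e in self._entries.items()
                      if term in name.lower() or term in e.description.lower())

    def resolve_input_port(self, consumer_domain: str, product: str, port: str) -> str:
        """Return connection information if the consumer's domain may read the product."""
        entry = self._entries.get(product)
        if entry is None:
            raise KeyError(f"unknown data product: {product}")
        if (consumer_domain != entry.owner_domain
                and consumer_domain not in entry.allowed_domains):
            raise PermissionError(f"{consumer_domain} may not read {product}")
        return entry.output_ports[port]


if __name__ == "__main__":
    registry = DataProductRegistry()
    registry.register("stock-levels", RegistryEntry(
        owner_domain="logistics", description="Current stock per warehouse",
        output_ports={"table": "LOGISTICS.STOCK.STOCK_LEVELS"},
        allowed_domains={"sales"}))
    print(registry.search("stock"))
    print(registry.resolve_input_port("sales", "stock-levels", "table"))
```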

Summary

In this article, we have shown how understanding Strauss’s needs as well as its organizational and technological premises led to the implementation of a highly scalable and extensible data platform.

The data platform follows the principles of the Data Mesh and reflects Strauss’s decentralized organizational structure. It has improved data quality and semantics, removed central bottlenecks for new sources and use cases of analytical data, and is well integrated with the operational systems and their domain development teams.

One of the first use cases supported by the Data Mesh at Strauss is a data mart that provides an overarching view of stock and required the integration of a large number of data sources across departments.

What are your organization’s organizational and technological premises, and what could a data platform tailored to your needs look like? Contact us for a discussion!