Transforming a sizeable monolithic legacy application into a modern, distributed microservice system is complex and challenging. Before taking the first steps, the objectives of such a migration should be well thought through, explicitly defined, and discussed with all decision-makers. Crucial questions to discuss about the purpose of the migration include, for example:

- Is it to react more quickly to varying load scenarios?

- Is it for faster implementation and deployment of new features, as coordinating a bigger development team is difficult and time-consuming?

- Is it to improve resilience, or is scaling too expensive due to the monolith’s huge resource requirements?

- Does the monolith use outdated technologies or libraries that are hard to replace but worry your security department?

The objectives are crucial in the transformation process and serve as guidelines for the new architecture. Once they are well defined, the next step is to gain a complete understanding of the monolith’s functionality. Legacy applications have typically grown over a long time, and changing requirements may have left dead code paths behind. Another key point, therefore, is to identify the features that are actually in use and essential, and to sketch a high-level business model from the code. You should also assign a business value to each feature and its corresponding code to estimate migration costs and weigh them against the benefits. Is a given feature worth supporting, or is its business value negligible and out of proportion to the costs of modernizing, maintaining, and providing it, for example due to its high resource requirements?

When transforming a legacy monolith into a modern microservice architecture, you need to know which key aspects to consider. This article discusses design goals for microservices and design approaches for decomposing a legacy monolith into a set of smaller services, and it suggests a pattern for the actual implementation process.

Breaking the Monolith

Assuming all the non-trivial questions outlined above have been answered and all parties have agreed on the answers and objectives, the actual transition to a microservice architecture can be planned.

But what is the appropriate approach to breaking a monolith into microservices? Let’s first discuss some common misconceptions.

The smaller, the better?

Hypothesis 1: As its name suggests, a microservice should be as small as possible, i.e., the smaller, the better.

Assuming this hypothesis is true, the ideal solution is to split the monolith along package, module, or even class boundaries. As a result, we get a myriad of tiny microservices that all must communicate with each other over the network. Apart from the prohibitively high effort of creating so many microservices, the even worse problem is that the new design merely mirrors the structure and program flow of the old monolith. The new architecture retains the monolith’s characteristics, including all the shortcomings and problems that motivated the switch to a microservice design in the first place. For instance, a significant change in one such tiny microservice will likely force you to update many other microservices, too, just as a breaking change to an interface, class, or module does in the monolith. On top of that, the new architecture is even worse than the monolith, as it replaces fast in-process communication with slow network communication.
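To make the ripple effect concrete, here is a minimal Python sketch. It assumes a hypothetical class-level split of the blog service in which each former class has become its own service, and it computes the worst case: every service that directly or transitively calls a changed service and might therefore need an update when that service’s interface breaks. All service names are made up for illustration.

```python
from collections import defaultdict, deque
from typing import Dict, Set

# Hypothetical "nano-services" from a class-level split: service -> services it calls.
CALLS: Dict[str, Set[str]] = {
    "ArticleController": {"ArticleService", "ImageService"},
    "ArticleService": {"TextValidator", "ArticleRepository"},
    "ImageService": {"ImageScaler", "ImageRepository"},
    "ImageScaler": {"ImageRepository"},
    "TextValidator": set(),
    "ArticleRepository": set(),
    "ImageRepository": set(),
}


def affected_by_breaking_change(calls: Dict[str, Set[str]], changed: str) -> Set[str]:
    """Return all services that directly or transitively call `changed` (worst case)."""
    # Invert the call graph so we can walk from a callee to its callers.
    callers: Dict[str, Set[str]] = defaultdict(set)
    for caller, callees in calls.items():
        for callee in callees:
            callers[callee].add(caller)

    # Breadth-first search over the inverted edges.
    affected: Set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for caller in callers[current]:
            if caller not in affected:
                affected.add(caller)
                queue.append(caller)
    affected.discard(changed)  # a cycle back to the changed service does not count
    return affected


print(sorted(affected_by_breaking_change(CALLS, "ImageRepository")))
# ['ArticleController', 'ImageScaler', 'ImageService']
```

In this toy graph, a breaking change to `ImageRepository` can reach three other services, which is exactly the kind of coordinated change the migration was supposed to eliminate.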

Clearly, the hypothesis from above can’t hold, and size should not be the deciding factor for splitting a legacy monolith.

Performance matters most?

Hypothesis 2: Legacy code should be broken up at naturally existing boundaries that promise the best performance as a microservice. The most suitable programming language should be used to maximize performance.

Let’s assume we have a large monolithic blog article hosting service that provides an API for processing images and texts.

- Images can be stored, scaled, converted, and modified by applying various filters.

- There is a semantic search functionality for finding similar images.

- There is an image-to-text operation to get a text description from an image.

- Texts can be stored and published as blog articles.

- There is a search engine functionality for finding similar texts.

- There is an option for spelling correction.

- There is a text-to-image function that generates an image based on a given text.

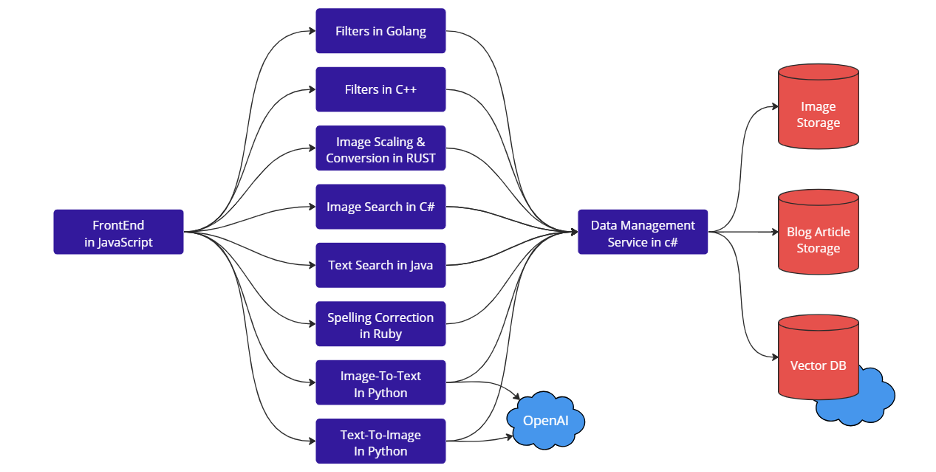

We could investigate the various functionalities and discover that some filter algorithms perform best when implemented in C++, while others require heavy parallelization and are thus best implemented in Go. Image scaling and conversion might be memory-critical operations and, thus, best implemented in Rust. For the semantic search on images, the monolith might use a vector database hosted in Azure. Hence, a C# microservice for accessing and managing the data provides the best performance, while the actual image-to-text functions benefit most from a microservice using Python’s AI capabilities.

We can do similar analyses for the text processing part to optimize performance per functionality and create a dedicated microservice using the programming language that provides the best performance.

To optimize data access performance and ensure strong data consistency among all services, we could use one logical database for images and one for texts, for instance to avoid network overhead and to enable joins across tables holding data from different microservices.

A sketch of the microservices as described above could look as follows:

However, even if we ignore the potential communication overhead between related services, this approach comes with major difficulties, too.

- Mastering all those coding skills requires highly specialized knowledge within a company (even in the modern age of AI coding assistants 😉). Dedicated teams per programming language and service may need to be created. In such a case, developers cannot easily switch teams to help out, and team sizes cannot easily be adjusted to business needs.

- Responsibilities are unclear. If, for example, a new filter should be implemented, which team should take over?

- Either teams and microservices cannot act independently, because they must at least discuss and agree on external interfaces and data contracts, which means that dependencies similar to those in monolithic development continue to exist. Or, if teams do act independently, the APIs of microservices that together implement a specific business logic can look completely different and use completely different terminologies, endpoints, naming, data transfer objects (DTOs), IDs, etc.

- Using a single database for all these services can also require enormous effort to synchronize and coordinate the required data storage formats (e.g., table design). Otherwise, data may be stored inefficiently, duplicated, and forwarded to services even if they do not need all of it. Adapting such data formats later is, again, costly.

- Some services might still be monolithic in nature and prevent you from achieving your desired objectives. In the example above, that might be the case for the data management service.

As a result, the dependencies between the microservices are just as strong, and the development effort is just as complex as with a monolith, if not more so. Therefore, the second hypothesis cannot be upheld either.

What really matters?

When designing a microservice architecture, the following core principles are crucial.

1. High Cohesion

A microservice should contain all strongly related code and comprise the entirety of all corresponding functionalities. If some of its functionality is changed, only the code within this microservice must be changed. If some functionality outside a given microservice is changed, the change should not affect the microservice at hand.

As described above, Hypothesis 1 about the size of microservices contradicts this principle.

2. Single Responsibility Principle (SRP)

A microservice should encompass a single, well-defined responsibility or functionality of the underlying business logic. If some functionality needs to be changed due to a bug or new requirements, only the corresponding microservice needs to be changed.

Hypothesis 2 does not guarantee this principle either. Some of the example services, such as dedicated filter services, may fulfill it, but others potentially do not. For instance, the data management service handles access to image, text, and vector databases and must translate potentially very different DTOs from various services into queries for the corresponding storage backend.

3. Loose Coupling

Microservices should be only loosely coupled, i.e., dependencies between microservices should be kept to a minimum. Dependencies arise not only from explicit interactions but also implicitly from shared data contracts or deployment order requirements. Implicit dependencies can also stem from the need to share knowledge, negotiate arrangements, or any other reason that requires multiple teams to communicate.
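One rough way to check a proposed decomposition against these principles is to count how many calls between monolith components stay inside a service versus cross a service boundary. The Python sketch below assumes you have already extracted caller/callee pairs (for example, with a static analysis tool of your choice); the component names, the two candidate assignments, and the metric itself are hypothetical illustrations, not a standard measure.

```python
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str]  # (calling component, called component)

# Hypothetical call edges extracted from the monolith.
EDGES: List[Edge] = [
    ("ScaleFilter", "ImageStore"),
    ("BlurFilter", "ImageStore"),
    ("ImageToText", "ImageStore"),
    ("ImageToText", "TextStore"),
    ("SpellChecker", "TextStore"),
    ("Publisher", "TextStore"),
]

# Candidate 1: split by "best" language per component (Hypothesis 2).
BY_LANGUAGE: Dict[str, str] = {
    "ScaleFilter": "rust-svc", "BlurFilter": "cpp-svc", "ImageToText": "python-svc",
    "SpellChecker": "java-svc", "Publisher": "go-svc",
    "ImageStore": "data-svc", "TextStore": "data-svc",
}

# Candidate 2: split along domain boundaries.
BY_DOMAIN: Dict[str, str] = {
    "ScaleFilter": "image", "BlurFilter": "image", "ImageToText": "image", "ImageStore": "image",
    "SpellChecker": "text", "Publisher": "text", "TextStore": "text",
}


def coupling_report(edges: List[Edge], assignment: Dict[str, str]) -> dict:
    """Count in-process vs. cross-service calls for a component-to-service assignment."""
    internal = 0
    cross = 0
    dependencies: Set[Tuple[str, str]] = set()
    for caller, callee in edges:
        src, dst = assignment[caller], assignment[callee]
        if src == dst:
            internal += 1  # stays inside one service: a sign of cohesion
        else:
            cross += 1  # becomes a network call: a sign of coupling
            dependencies.add((src, dst))
    total = internal + cross
    return {
        "internal_ratio": internal / total if total else 1.0,
        "cross_service_calls": cross,
        "service_dependencies": sorted(dependencies),
    }


for name, assignment in [("by language", BY_LANGUAGE), ("by domain", BY_DOMAIN)]:
    print(name, coupling_report(EDGES, assignment))
```

Here the language-based split from Hypothesis 2 turns every call into a network call, whereas the domain-based split keeps five of six calls in-process and leaves a single image-to-text dependency. Static call counts ignore call frequency and shared data, so treat such numbers as a conversation starter rather than a verdict.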

The size of a microservice does not matter, and it can vary among the various microservices that form a system together. Still, due to the SRP, their size will be much smaller by design than the original monolith.

Moreover, following all three principles when designing a microservice architecture makes your software more efficient, scalable, and maintainable.

- Efficient, as resources can be flexibly assigned to each service according to the actual needs of the corresponding functionality.

- Scalable, as services with varying resource requirements can be individually scaled up and down by replication without affecting other services.

- Maintainable, as bugs and new features are local to a microservice that represents only a tiny subset of all the functionalities of the original large monolith, and other microservices can stay untouched.

Now that we know what to pay attention to, the question remains how to transform the monolith into a suitable set of microservices.

Design Approaches

No general template can be applied to split a legacy monolith into the right set of well-defined microservices. The process is challenging and relies equally on deep knowledge of the legacy service’s internals, the business logic it needs to fulfill, the value each component contributes to the business, and general experience in designing microservice architectures.

However, there are recommended paths that can serve as guidelines when breaking a monolith into microservices. One such path starts with identifying business capabilities.

Identifying Business Capabilities (BC)

Essentially, BCs describe what a business does to create value for the company and its customers. In this approach, the BCs drive the boundaries of the microservices, and the decomposition is done from the business side. However, this approach is not easy to realize, as BCs are best understood by businesspeople, whereas developers implement the architectural redesign. Hence, businesspeople and developers need to speak the same language and find a common understanding of each BC. Once identified, BCs can serve as the basis for dedicated microservices and are a good starting point for designing the services. Still, BCs can lead to a too coarse-grained partitioning of the monolith and overly complex microservices, so they may need to be broken down further into smaller services. One approach to finding an appropriate set of microservices based on BCs is Domain-Driven Design.

Domain-Driven Design (DDD)

DDD provides principles and patterns for developing complex domain models. A domain is a “sphere of knowledge, influence or activity.” The business capabilities typically match the domains at their highest level. With DDD and its strategic patterns, however, you can further divide complex business capabilities into smaller subdomains, break the unified model of a monolith into smaller, dedicated, and manageable domain models, and eventually define the boundaries of the microservices. DDD’s tactical patterns guide the software design of each microservice based on its subdomain model and lead to highly decoupled, cohesive microservices. Moreover, DDD helps you categorize subdomains into three types (core, supporting, and generic) based on their business value and complexity. This enables you to prioritize microservices and to weigh in-house development against using or licensing an existing solution.
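The subdomain categorization can be expressed as a simple decision rule. The sketch below is a deliberately simplified heuristic: it reduces “business value and complexity” to two yes/no questions (does the subdomain differentiate the business, and does a mature off-the-shelf solution exist?). The example subdomains and their answers are hypothetical and only loosely based on the blog platform from above.

```python
from dataclasses import dataclass
from enum import Enum


class SubdomainType(Enum):
    CORE = "core"
    SUPPORTING = "supporting"
    GENERIC = "generic"


@dataclass(frozen=True)
class Subdomain:
    name: str
    differentiating: bool  # Does it set the business apart from competitors?
    off_the_shelf: bool  # Does a mature product or library already solve it?


def classify(subdomain: Subdomain) -> SubdomainType:
    """Simplified heuristic for DDD's core/supporting/generic categories."""
    if subdomain.differentiating:
        return SubdomainType.CORE
    if subdomain.off_the_shelf:
        return SubdomainType.GENERIC
    return SubdomainType.SUPPORTING


# Typical sourcing strategy per category.
SOURCING = {
    SubdomainType.CORE: "build in-house with the most experienced team",
    SubdomainType.SUPPORTING: "build in-house with simple means or outsource",
    SubdomainType.GENERIC: "use or license an existing solution",
}

# Hypothetical answers for subdomains of the blog platform.
SUBDOMAINS = [
    Subdomain("Semantic image search", differentiating=True, off_the_shelf=False),
    Subdomain("Article publishing workflow", differentiating=False, off_the_shelf=False),
    Subdomain("Spelling correction", differentiating=False, off_the_shelf=True),
]

# Core subdomains first: they usually deserve the earliest and largest investment.
priority = {SubdomainType.CORE: 0, SubdomainType.SUPPORTING: 1, SubdomainType.GENERIC: 2}
for sd in sorted(SUBDOMAINS, key=lambda s: priority[classify(s)]):
    kind = classify(sd)
    print(f"{sd.name}: {kind.value} -> {SOURCING[kind]}")
```

Real classifications come out of discussions with domain experts; the value of writing the rule down is that the criteria become explicit and debatable.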

Microservice Basics

There are many things to consider when developing a modern software architecture, especially a modern cloud-native or microservice system. The details are beyond the scope of this article. However, since I explicitly identified the data management service from above as a problem, let us briefly discuss data management in the context of microservices.

Each microservice requiring access to data from an external storage backend should get its own dedicated database. This guarantees data autonomy per microservice and makes it possible to use specialized database systems with independent schemas that fit individual needs or enable optimal performance. It also further decouples services and allows teams to act independently, e.g., when changing data or schemas. However, special care must be taken when multiple microservices require the same data and, therefore, copies or duplicates are created. The data should be owned by exactly one responsible team and microservice, and the owner’s data is the source of truth for all copies. If a microservice needs data from another microservice, access should always go through a well-defined API and never directly to the other service’s database. Consequently, this introduces some latency, and an eventual consistency model replaces strong consistency. If performance becomes an issue, caches can help. Also note that, in contrast to a system with a traditional single database, database transactions and join operations spanning the data of several services are no longer available. This can introduce additional challenges when designing the new microservice architecture.
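The following Python sketch illustrates data ownership and eventual consistency under simplifying assumptions: an in-memory queue stands in for a real message broker, the hypothetical `ImageCatalogService` owns image titles, and the hypothetical `ImageSearchService` keeps its own read copy that it updates from events. The search service never touches the catalog’s store directly.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterator, List, Optional


@dataclass(frozen=True)
class ImageTitleChanged:
    """Event published by the owning service (hypothetical event name)."""
    image_id: str
    title: str


class MessageBroker:
    """In-memory stand-in for a real message broker; supports a single consumer only."""

    def __init__(self) -> None:
        self._queue: Deque[ImageTitleChanged] = deque()

    def publish(self, event: ImageTitleChanged) -> None:
        self._queue.append(event)

    def poll(self) -> Iterator[ImageTitleChanged]:
        while self._queue:
            yield self._queue.popleft()


class ImageCatalogService:
    """Owns image metadata: its private store is the source of truth."""

    def __init__(self, broker: MessageBroker) -> None:
        self._db: Dict[str, str] = {}  # private database, never accessed by other services
        self._broker = broker

    def save(self, image_id: str, title: str) -> None:
        self._db[image_id] = title
        self._broker.publish(ImageTitleChanged(image_id, title))

    def get_title(self, image_id: str) -> Optional[str]:
        """Well-defined API for services that need the authoritative value."""
        return self._db.get(image_id)


class ImageSearchService:
    """Keeps its own read-optimized copy, updated from events (eventually consistent)."""

    def __init__(self, broker: MessageBroker) -> None:
        self._titles: Dict[str, str] = {}
        self._broker = broker

    def sync(self) -> None:
        for event in self._broker.poll():
            self._titles[event.image_id] = event.title

    def search(self, term: str) -> List[str]:
        needle = term.lower()
        return sorted(i for i, t in self._titles.items() if needle in t.lower())


broker = MessageBroker()
catalog = ImageCatalogService(broker)
search = ImageSearchService(broker)

catalog.save("img-1", "Sunset over the sea")
stale = search.search("sunset")  # the copy has not caught up yet
search.sync()
fresh = search.search("sunset")
print(stale, fresh)  # [] ['img-1']
```

Right after `save`, the search copy is stale until `sync` processes the event, which is eventual consistency in miniature. In a real system, writing to the database and publishing the event must not diverge; patterns such as the transactional outbox address this.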

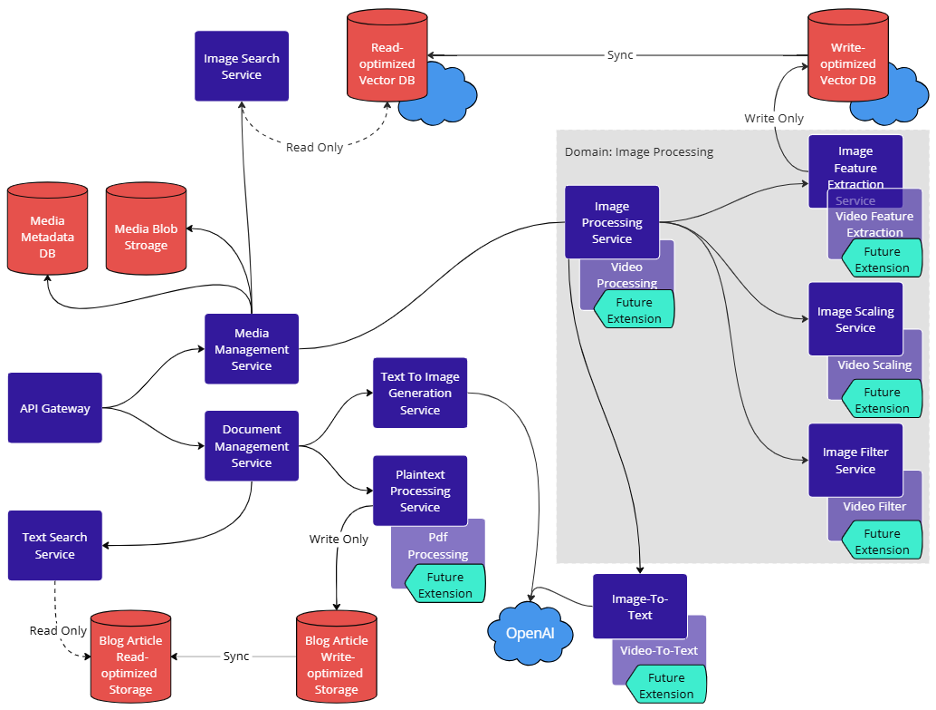

A modern microservice architecture design for the system described above could look like the following sketch. However, the sketch is just a made-up example. A real design depends heavily on the requirements and details of the underlying business capabilities and domains.

The services within the box “Domain: Image Processing” logically belong together. Depending on their complexity and requirements, they can be implemented as dedicated microservices or as in-process functions of the same service. This sketch should provide an idea of a possible design and showcase how easily it can be extended. For instance, the design can be extended to include microservices for video processing in the same way as for image processing, and a microservice for PDF processing in the same way as for plain text processing. Specialized storage backends can be used for each microservice and use case, e.g., backends optimized for read-only or write-only access. The source of truth lies with the owner of the data, i.e., the service writing the data. Moreover, to counteract the problems mentioned in relation to Hypothesis 2, the programming language for each microservice should be chosen from only a small set of languages.
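As an illustration of that extensibility, an entry point such as an API gateway could route requests by media type to the responsible domain service. The Python sketch below assumes a hypothetical `MediaRouter` and made-up internal service URLs; adding video or PDF processing then amounts to one additional registration rather than a change to existing services.

```python
from typing import Dict


class MediaRouter:
    """Routes requests to domain services by MIME type (hypothetical gateway logic)."""

    def __init__(self) -> None:
        self._routes: Dict[str, str] = {}

    def register(self, mime_pattern: str, service_url: str) -> None:
        # Patterns are exact types ("application/pdf") or wildcards ("image/*").
        self._routes[mime_pattern.lower()] = service_url

    def resolve(self, content_type: str) -> str:
        # Drop parameters such as "; charset=utf-8" before matching.
        mime = content_type.split(";", 1)[0].strip().lower()
        major = mime.split("/", 1)[0]
        for candidate in (mime, f"{major}/*"):  # exact match wins over wildcard
            if candidate in self._routes:
                return self._routes[candidate]
        raise LookupError(f"no service registered for {content_type!r}")


router = MediaRouter()
router.register("image/*", "http://image-processing.internal")
router.register("text/plain", "http://text-processing.internal")

# Extending the system later: one registration per new domain service.
router.register("video/*", "http://video-processing.internal")
router.register("application/pdf", "http://pdf-processing.internal")

print(router.resolve("video/mp4"))  # http://video-processing.internal
```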

From design to implementation

The design of a modern microservice architecture is only one big challenge. The next one is how to start its implementation and how best to transition from the legacy to the modern system.

If the legacy system is a complex monolith, replacing it with a new microservice architecture as a whole, in a single colossal effort, is not the best way to go. The time and costs of such an approach are simply too high. No new features could be implemented during such a phase, or they would have to be implemented twice, in both the legacy and the modern system. Estimating the additional time and effort this adds to the overall costs is difficult.

Strangler fig pattern

Instead, it is advisable to apply the Strangler Fig pattern. Here, microservices are brought to life one by one, iteratively replacing the corresponding parts of the legacy system, which can be switched off bit by bit until the entire legacy system has disappeared and the new, modern system fully replaces it.
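At its core, the Strangler Fig pattern relies on a facade in front of the legacy system that decides, per request, whether the monolith or a new microservice answers. The Python sketch below assumes path-prefix routing and hypothetical service URLs; in practice, this role is often taken by a reverse proxy or API gateway configuration.

```python
from typing import Dict


class StranglerFacade:
    """Decides per request path whether the monolith or a new service answers."""

    def __init__(self, legacy_url: str) -> None:
        self._legacy_url = legacy_url
        self._migrated: Dict[str, str] = {}  # path prefix -> new service URL

    def migrate(self, prefix: str, service_url: str) -> None:
        self._migrated[prefix.rstrip("/")] = service_url

    def rollback(self, prefix: str) -> None:
        # Send traffic for this prefix back to the monolith.
        self._migrated.pop(prefix.rstrip("/"), None)

    def target(self, path: str) -> str:
        matches = [
            prefix for prefix in self._migrated
            if path == prefix or path.startswith(prefix + "/")
        ]
        if not matches:
            return self._legacy_url  # not migrated yet: the monolith still answers
        return self._migrated[max(matches, key=len)]  # most specific prefix wins


facade = StranglerFacade("http://legacy-monolith.internal")
facade.migrate("/images/filters", "http://filter-service.internal")
print(facade.target("/images/filters/blur"))  # http://filter-service.internal
print(facade.target("/articles/42"))  # http://legacy-monolith.internal
```

Longest-prefix matching lets you carve out a narrow slice first (here, the filters) and migrate the surrounding functionality later, while `rollback` switches a slice back to the monolith if the new service misbehaves.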

This ensures the continuous operation of your business while new features can be implemented within one of the new microservices. Moreover, certain problems with the legacy system can be solved more quickly by replacing the corresponding parts with well-designed, modern microservices. This means your business is not interrupted, development can happen in parallel, and progress becomes visible quickly and continuously.

Hence, after finalizing the design of a microservice architecture, the next important step is to identify and prioritize the microservices that will most benefit your business.

Microservices can be ranked according to the following characteristics of the monolith’s code parts that they are meant to replace:

- Frequently changing code: Code that is frequently touched often contributes most to business and benefits most from a modern re-design and the possibility of quicker re-deployments. Code that never changes requires no improvement!

- Code with high scalability needs: Horizontal scaling of the corresponding microservices may resolve your monolith’s resource bottlenecks and allow for flexible adjustments to use cases and load scenarios.

- Clean and coherent code: A good logical separation naturally defines the specific domain of a microservice.

In this way, microservices can be iteratively prioritized and implemented one by one until the entire modern system is completed.
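A lightweight way to support this ranking is to combine measured change frequency with the team’s estimates for scalability needs and cohesion. The Python sketch below counts, per module, how many commits touched it, based on the output of `git log --name-only --pretty=format:` (assuming commits are separated by blank lines), and then computes a weighted score. The module paths, the 0 to 5 rating scale, and the weights are hypothetical and should be tuned to your own objectives.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Set, Tuple


def commits_per_module(git_log_output: str, depth: int = 2) -> Counter:
    """Count commits touching each module in `git log --name-only --pretty=format:` output."""
    counts: Counter = Counter()
    current: Set[str] = set()
    for line in git_log_output.splitlines() + [""]:  # trailing "" flushes the last commit
        path = line.strip()
        if path:
            current.add("/".join(path.split("/")[:depth]))  # e.g. "src/filters"
        elif current:
            counts.update(current)  # each module counts once per commit
            current = set()
    return counts


@dataclass(frozen=True)
class Candidate:
    module: str
    scaling_need: int  # 0-5, team estimate
    cohesion: int  # 0-5, team estimate


def rank(candidates: List[Candidate], changes: Counter,
         weights: Tuple[float, float, float] = (0.5, 0.3, 0.2)) -> List[Candidate]:
    """Order candidates by a weighted score; higher means migrate earlier."""
    max_changes = max(changes.values(), default=0) or 1
    w_churn, w_scale, w_cohesion = weights

    def score(c: Candidate) -> float:
        churn = 5 * changes.get(c.module, 0) / max_changes  # normalize to 0-5
        return w_churn * churn + w_scale * c.scaling_need + w_cohesion * c.cohesion

    return sorted(candidates, key=score, reverse=True)


# Hypothetical log excerpt; in practice: git log --name-only --pretty=format:
LOG = """src/filters/blur.py
src/filters/sharpen.py

src/filters/blur.py

src/search/index.py

src/filters/edge.py
src/billing/invoice.py
"""

changes = commits_per_module(LOG)
ranking = rank(
    [Candidate("src/filters", 2, 4), Candidate("src/search", 5, 3), Candidate("src/billing", 0, 2)],
    changes,
)
print([c.module for c in ranking])  # ['src/filters', 'src/search', 'src/billing']
```

Normalizing change counts to the same 0 to 5 scale as the estimates keeps one factor from dominating merely because of its units.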

Implementation Prerequisites

Another important question is how to ensure that the new microservices perform their intended functions correctly. Even if the business logic is ported directly from the monolith, how can we guarantee that the reimplementation behaves exactly the same way when it uses new technologies and a completely different software design?

The key is good test coverage of the monolith’s code parts that will be replaced. By migrating tests (e.g., system, functional, acceptance, and, to some degree, integration tests) to the corresponding microservice where applicable, you can ensure that the new service behaves semantically the same. This also means that if test coverage is low, it is important to first write more tests for the monolith’s code.
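The idea of reusing tests can be sketched as a parity check: the same test cases run against the legacy code and the reimplementation, and any divergence is reported. The Python example below assumes a hypothetical title-to-URL-slug function from the blog platform; `legacy_slugify` stands in for the monolith’s code and `new_slugify` for the new service’s implementation.

```python
import re
from typing import Callable, Dict, List, Tuple


def legacy_slugify(title: str) -> str:
    """Stand-in for the monolith's implementation (hypothetical)."""
    result = ""
    for ch in title.lower():
        if ch.isalnum():
            result += ch
        elif result and not result.endswith("-"):
            result += "-"  # collapse any run of other characters into one hyphen
    return result.rstrip("-")


def new_slugify(title: str) -> str:
    """Reimplementation in the new publishing microservice (hypothetical)."""
    # [^\W_] matches alphanumeric characters, i.e., word characters minus the underscore.
    return "-".join(re.findall(r"[^\W_]+", title.lower()))


# Test cases migrated from the monolith's test suite (hypothetical).
CASES: Dict[str, str] = {
    "Hello, World!": "hello-world",
    "  Leading spaces": "leading-spaces",
    "C++ & Rust": "c-rust",
    "snake_case_title": "snake-case-title",
    "": "",
}


def parity_failures(legacy: Callable[[str], str], new: Callable[[str], str],
                    cases: Dict[str, str]) -> List[Tuple[str, str, str]]:
    """Return (input, legacy output, new output) wherever outputs or expectation disagree."""
    failures = []
    for given, expected in cases.items():
        old_out, new_out = legacy(given), new(given)
        if not (old_out == new_out == expected):
            failures.append((given, old_out, new_out))
    return failures


print(parity_failures(legacy_slugify, new_slugify, CASES))  # []
```

Running the legacy implementation alongside the expectations also catches cases where the old test suite itself no longer matches what the monolith actually does.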

Finally, before starting the development of a new microservice, a clean API needs to be defined, which can be used to intercept calls to components of the monolith and route them to the new microservice. In this way, new microservices can be integrated into the monolith’s operations, tested, and, if necessary, shut off again and improved before the corresponding code in the monolith is finally removed.
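One possible shape for such an interception point is a shared interface with a migrating implementation in front of both the legacy component and the new service. The Python sketch below assumes a hypothetical spell-checking component: while `use_new` is off, the legacy result is returned and the new service runs in shadow mode so mismatches can be counted; when switched on, the new service answers, with a fallback to the legacy code if it fails. Both implementations are toy stand-ins.

```python
import logging
from typing import Protocol

logger = logging.getLogger(__name__)


class SpellChecker(Protocol):
    """The clean API both implementations offer (hypothetical)."""

    def correct(self, text: str) -> str: ...


class LegacySpellChecker:
    """Adapter around the monolith's in-process component (toy stand-in)."""

    def correct(self, text: str) -> str:
        return text.replace("teh", "the")


class SpellCheckServiceClient:
    """Client for the new microservice; a real one would call it over the network."""

    def correct(self, text: str) -> str:
        return text.replace("teh", "the").replace("recieve", "receive")


class MigratingSpellChecker:
    """Intercepts calls and decides whether the legacy code or the new service answers."""

    def __init__(self, legacy: SpellChecker, new: SpellChecker, use_new: bool = False) -> None:
        self._legacy = legacy
        self._new = new
        self.use_new = use_new  # feature toggle: can be switched off again at any time
        self.mismatches = 0

    def correct(self, text: str) -> str:
        if self.use_new:
            try:
                return self._new.correct(text)
            except Exception:
                logger.exception("new spell-check service failed, falling back to legacy code")
                return self._legacy.correct(text)
        # Shadow mode: the legacy result is authoritative, the new service is only compared.
        legacy_result = self._legacy.correct(text)
        try:
            if self._new.correct(text) != legacy_result:
                self.mismatches += 1
        except Exception:
            logger.exception("new spell-check service failed in shadow mode")
        return legacy_result


checker = MigratingSpellChecker(LegacySpellChecker(), SpellCheckServiceClient())
print(checker.correct("recieve teh mail"), checker.mismatches)  # recieve the mail 1
checker.use_new = True
print(checker.correct("recieve teh mail"))  # receive the mail
```

A mismatch is not automatically a bug in the new service (here it fixes an extra typo), but every divergence deserves a look before the legacy code is removed.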

Ready to take on the Challenges?

Transitioning from a monolith with complex business logic to a modern microservice architecture is an intricate undertaking. Designing the microservice architecture requires analyzing and understanding the legacy monolith’s code, model, and business logic, identifying the corresponding business capabilities, and breaking them down into domains and subdomains. The process involves many different roles in a company, from businesspeople and domain experts to developers, and requires an intensive communication phase even before the first thought can be given to the design. Reaching a shared understanding of, and agreement on, features and requirements among all parties involved is essential. Implementing modern microservices requires experience with appropriate technologies and knowledge of best practices, pitfalls, and potential performance bottlenecks.

If you plan such a challenging undertaking in your company and are looking for support, we at CID have many years of experience and are ready to help. Feel free to contact us!